Concept

We warmly recommend watching this insightful presentation by our Architect @Asmodat to explore KIRA's unique approach to General-Purpose Rollups.

High-performance Applications With Blockchain Systems

Bandwidth as the Ultimate Bottleneck for Scalability

Blockchains are specific implementations of the broader concept of verifiable general computation, a principle that ensures the integrity of a computation’s execution without relying on trusted hardware. In a process referred to as consensus, blockchains verify computation through naive re-execution, where multiple computers (nodes) each execute the same computation with an identical sequence of ordered inputs. These nodes then agree on the output (also referred to as the state of the blockchain application), ensuring it is identical across all nodes. Once consensus is reached, the ordered inputs are batched into a so-called ‘block’ and saved (or ‘chained’) to the blockchain in a way that makes them resistant to re-organization, thus achieving finality. However, while this consensus process guarantees integrity, it also introduces a significant scalability bottleneck: as the number of nodes or the computational workload increases, the time required for all nodes to reach consensus on the outputs grows. The maximum achievable throughput (the rate at which transactions are processed) therefore decreases, which ultimately limits the diversity and complexity of applications that can be executed.
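The re-execution process described above can be illustrated with a minimal sketch. It is not KIRA's implementation: the balance-map state, the helper names (`apply_inputs`, `state_hash`, `reach_consensus`), and the "more than two thirds" agreement threshold are all assumptions chosen for illustration.

```python
import hashlib
import json
from collections import Counter


def apply_inputs(state, ordered_inputs):
    """Deterministically apply an ordered batch of transfers to a balance map."""
    new_state = dict(state)
    for sender, receiver, amount in ordered_inputs:
        if new_state.get(sender, 0) >= amount:  # skip transfers that would overdraw
            new_state[sender] = new_state.get(sender, 0) - amount
            new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


def state_hash(state):
    """Hash a canonical (sorted-key) JSON encoding so equal states give equal hashes."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def reach_consensus(reported_hashes):
    """Return the hash reported by more than 2/3 of the nodes, or None (assumed threshold)."""
    winner, votes = Counter(reported_hashes).most_common(1)[0]
    return winner if 3 * votes > 2 * len(reported_hashes) else None


genesis = {"alice": 10, "bob": 0}
block_inputs = [("alice", "bob", 4), ("bob", "alice", 1)]

# Four honest nodes independently re-execute the same ordered inputs.
honest_hashes = [state_hash(apply_inputs(genesis, block_inputs)) for _ in range(4)]

# A faulty node applies the inputs in a different order and ends in a different state.
faulty_hash = state_hash(apply_inputs(genesis, list(reversed(block_inputs))))

agreed = reach_consensus(honest_hashes + [faulty_hash])
```

Every node performs the full computation, so adding nodes adds redundancy rather than capacity. This is the cost the rest of the section sets out to reduce.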

This scalability issue was initially formulated in Zamfir's impossibility conjecture, which posits that no distributed system can simultaneously achieve a high node count (decentralization), fast finality (a short time until the transaction history becomes immutable after consensus), and low protocol overhead (the amount of data that must be transferred to reach consensus). This hypothesis, while not formally proven, is viewed as a reinterpretation of the CAP theorem. In essence, consensus is inherently constrained by two factors: the hardware performance of the most resource-limited nodes in the supermajority, and a fundamental bandwidth limitation, namely the speed of light, which sets an upper limit on the rate of data transmission over fiber optic cables. While both factors influence the system's performance, the latter presents a more significant long-term challenge. This is because the overhead of the consensus protocol, while increasing linearly with the volume of data, grows exponentially with the number of nodes. This growth happens independently of the hardware capabilities of individual nodes. Thus, if decentralization is a cardinal requirement, bandwidth optimization should be the focus for attaining better performance.
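To give a sense of scale for the speed-of-light limit mentioned above, the following arithmetic sketch computes a lower bound on propagation delay over fiber. The refractive index of about 1.47 is an assumed typical value for silica fiber, and the function names are illustrative only. Real networks add routing, queuing, and processing time on top of this floor.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # speed of light in vacuum, in km/s
FIBER_REFRACTIVE_INDEX = 1.47      # assumption: typical value for silica fiber


def min_one_way_delay_ms(distance_km):
    """Lower bound on one-way propagation delay over fiber, in milliseconds."""
    speed_in_fiber = SPEED_OF_LIGHT_KM_S / FIBER_REFRACTIVE_INDEX
    return distance_km / speed_in_fiber * 1000


def min_round_delay_ms(distance_km, communication_steps):
    """Lower bound for a protocol round that needs several sequential message hops."""
    return communication_steps * min_one_way_delay_ms(distance_km)


# Two nodes 10,000 km apart: roughly 49 ms per hop, before any overhead.
one_hop = min_one_way_delay_ms(10_000)
three_hops = min_round_delay_ms(10_000, 3)
```

No hardware upgrade on an individual node lowers this floor, which is why the text treats data movement, not local compute, as the long-term constraint.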

Achieving Scalability Through Rollup-centric Designs

In the quest for improved performance, the industry is increasingly adopting strategies based on off-chain execution. These strategies, rooted in the concept of horizontal scalability, aim to boost the system’s throughput by distributing the computational load across 'parallel' chains. While there are many approaches to this technique, they fall into two main categories. Some projects, like Cosmos and Avalanche, have adopted a fragmentation approach, which involves dividing the system into multiple independent blockchain networks, each with its own consensus mechanism and Consensus nodes. Conversely, other projects, like Ethereum and Polkadot, have chosen a centric approach. This strategy focuses on maintaining a core ‘superchain’ (also referred to as the Coordination chain, Relay Chain, Layer 1, or even Layer 0, depending on the actual architecture) that acts as a single, shared source of finality for all dependent sub-networks (often referred to as Layer 2s or Rollups). We argue that only the second approach provides a compelling solution.

While the fragmentation approach theoretically improves scalability by distributing workload, it works under the assumption that all network states are autonomous. Each state has its own finality, with no source of unified finality across all networks. This, along with the lack of homogeneous security among them (each new network has a different Consensus node set and therefore a different security model), increases systemic risk as more chains are interconnected. Indeed, as observed in practice, the security of one chain often does not match the security of another. This considerably reduces the potential for composability, because additional trust assumptions compound during inter-network interactions. Deploying each individual network also demands a significant amount of time and resources from developers. Lastly, fragmentation does not optimize for bandwidth: each of these networks requires its own distinct consensus process for finality, so the bandwidth per load remains constant (assuming a constant security level). Therefore, despite all the tradeoffs it introduces, this architecture does not overcome the previously discussed limitations.

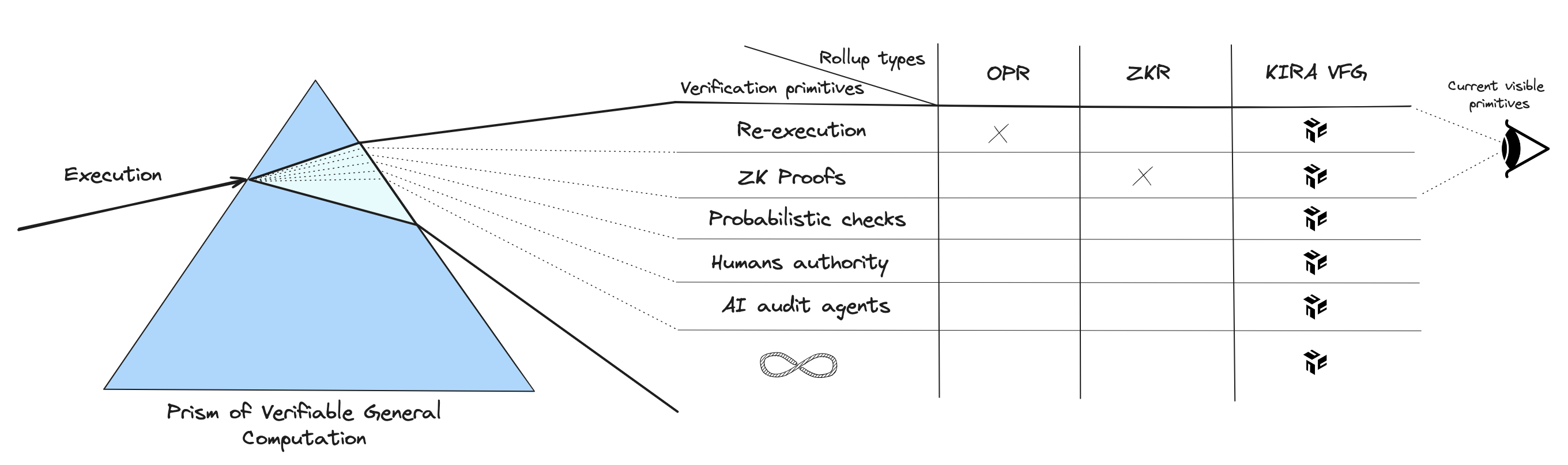

In the centric approach, however, the consensus of the ‘superchain’ and the execution of its rollup applications are separated. This allows the chain to focus on providing security and finality without having its consensus burdened by resource-intensive applications. This modularity offers a significant advantage over the fragmentation approach, since rollups don't need to undergo heavy consensus processes to achieve finality. Instead, they can use various other verification methods that validate the correctness of computations by anchoring themselves in the security and finality provided by the ‘superchain’. This opens up the opportunity to experiment with different verification methods that do not necessarily depend on a large number of operators, take less time to deploy, and are more cost-efficient in terms of bandwidth per load. Most importantly, this flexibility benefits rollup applications, as they can customize these methods based on their unique needs and trade-offs.

Taking it a step further, KIRA has devised a unique approach where Consensus nodes also shoulder the responsibility of hosting L2 applications, which includes managing Data Availability (that is, the application’s arbitrary data, since balances are settled directly on-chain). This considerably reduces the bandwidth requirements, as the data necessary to advance the state of the application is physically proximate to the nodes that require it. This stands in stark contrast to classical modular systems, where data is shuttled back and forth between various external modular networks, such as Data Availability, decentralized Prover, or Sequencer chains, that might be geographically far apart, thereby unnecessarily increasing bandwidth usage and inducing latency in the system. In this sense, KIRA embodies a hypermodular network, an architecture that reduces unnecessary overhead, complexity, and dependency risk by ensuring all essential modular components are readily accessible.

Harnessing the Extensive Capabilities of General-purpose Rollups With KIRA

Programmable Finality With the Virtual Finality Gadget

Rollups have significantly advanced blockchain scalability by enabling more powerful and cost-effective decentralized applications. However, existing solutions treat execution and verification as inseparable elements. This conflation requires applications to be designed around one specific verification method, such as Optimistic Rollups with fraud proofs or ZK-Rollups with validity proofs. Committing to a single verification scheme limits rollup applications' adaptability and their ability to keep up with evolving verification technologies. They could be tied to a method that becomes outdated or less efficient over time, potentially wasting considerable development time and financial resources. Moreover, this approach substantially restricts the scope of compatible logic, given that rollups are unable to use diverse methods collectively or interchangeably. The reason behind this limitation is rooted in the nature of general computation, where not all code strictly adheres to "logical" and deterministic patterns and hence cannot be fully verified using a single method. A program, even a blockchain, may encompass both deterministic and non-deterministic logic (e.g. bugs and hacks). Real-life scenarios and code are not always mutually exclusive (e.g. video games), and high-level applications are not impervious to real-world alterations, Bitcoin being no exception. Rather than being constrained to a single verification scheme, General-Purpose Rollups (decentralized general computation applications) should be able to harness diverse verification methods to express their full potential. For instance, while Risc0 employs validity proofs for computations, a process that can be relatively slow, it should ideally serve as just one component within a larger system. This allows the overarching system to leverage a variety of verification methods tailored to its respective subsystems as necessary, rather than being entirely dependent on the constraints of Risc0.

Building upon the concepts of rollups and the idea of modular execution, KIRA introduces the Virtual Finality Gadget (VFG) for its Layer 2 applications (also referred to as RollApps). This approach treats execution and verification as two distinct aspects of a verifiable general computation system. Each RollApp comprises a single homogeneous Docker container, which is run by one or more of KIRA’s Consensus nodes, known as Executors. This container operates a virtualized execution environment (the application logic). Alongside it, there can be an arbitrary number of heterogeneous verification containers. These are run by both Consensus and non-Consensus nodes, referred to as Verifiers, and define how changes in the application state are verified (the verification logic). This approach originates from the realization that various systems, whether they are blockchains, rollups, or others, are fundamentally similar but encounter different constraints due to their unique verification rules (commonly referred to as finality rules). Most importantly, the execution environment is agnostic to the nature of these rules, meaning that the execution logic can remain unchanged even as methods of verification evolve. It is even agnostic to the type of hardware, such as GPUs or CPUs, needed to perform the execution. It is therefore not limited by the hardware infrastructure of any individual Consensus node, which allows for diverse hardware configurations among different nodes. From custom Virtual Machines to ZK-Rollups, from Web2 applications to Multi-Party Computation Web3 applications, from an AI agent to a video game, any execution environment can be virtualized and verified using VFG. Concurrently, VFG enables the use of any combination of arbitrary methods for defining finality, which can even incorporate human authority or non-deterministic logic such as AI agents, with adjustments tailored to the specific security needs of the application, computational load, energy efficiency, and bandwidth usage. In essence, modular verification empowers developers to build complex trustless applications with maximum efficiency, and by doing so paves the way for General-Purpose Rollups to exist. Additionally, VFG liberates developers from the pitfalls of being tied to a single method of verification that could become outdated. Just as the Ethereum Virtual Machine was eventually superseded by application-specific chains and rollups, it can be expected that current verification technologies, such as Risc0, might be replaced in the future. This is because, in the realm of verifiable general computation, there is not just one solution; there are infinitely many.
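The separation between one execution environment and several interchangeable verification methods can be pictured as composing independent checks into a single finality rule. The sketch below is purely illustrative and does not reflect KIRA's actual interfaces: `Transition`, the three verifiers, and the `all_of` / `threshold` combinators are hypothetical names, and the integer "state" stands in for arbitrary application state.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transition:
    """A proposed state change produced by the execution environment."""
    prev_state: int
    inputs: tuple
    new_state: int


def re_execution_verifier(transition):
    """Deterministic check: recompute the new state and compare."""
    return transition.prev_state + sum(transition.inputs) == transition.new_state


def range_check_verifier(transition):
    """Cheap sanity check standing in for a lighter-weight method."""
    return transition.new_state >= 0


def human_approval_verifier(approved_states):
    """Build a verifier backed by an external sign-off list (e.g. a human authority)."""
    return lambda transition: transition.new_state in approved_states


def all_of(verifiers):
    """Finality requires every listed verifier to accept."""
    return lambda transition: all(v(transition) for v in verifiers)


def threshold(verifiers, k):
    """Finality requires at least k of the listed verifiers to accept."""
    return lambda transition: sum(1 for v in verifiers if v(transition)) >= k


# Hypothetical rule: re-execution must pass, plus at least one of two other checks.
finality_rule = all_of([
    re_execution_verifier,
    threshold([range_check_verifier, human_approval_verifier({15})], 1),
])

valid = Transition(prev_state=10, inputs=(2, 3), new_state=15)
invalid = Transition(prev_state=10, inputs=(2, 3), new_state=16)
```

Here `finality_rule(valid)` accepts and `finality_rule(invalid)` rejects. Swapping a verifier for a different method changes only the rule, not the code that produced the `Transition`, which mirrors the point that execution logic can stay unchanged as verification methods evolve.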

Finality is a spectrum.

Achieving True Turing Completeness With Blockchainless Applications

In his graypaper, "JAM", Gavin Wood, the grandfather of the Ethereum Virtual Machine, identifies five critical properties that decentralized application platforms must possess: resilience, generality, performance, composability, and accessibility. Among these, generality (defined as the platform's ability to perform Turing-complete computations) is an essential and widely accepted prerequisite for fostering innovation. Interestingly, Wood notes that although current smart contract platforms are designed to support Turing completeness, execution is, in fact, bounded. While these platforms do provide some memory space and a few computation cycles, these resources are quickly exhausted due to the inherent concept of 'gas'. In blockchain systems, 'gas' serves two main roles. It acts as a payment mechanism for memory usage and computation, and it serves as a solution to the 'halting problem' by limiting the number of computations executed by a batch of inputs, essentially defining the computational capacity of a single block. This limitation is necessary given that blockchains, as a specific implementation of verifiable computation, rely on the re-execution of each block by a supermajority of nodes within a specified time. Without 'gas', a block generating unrestricted computations would not reach finality, potentially halting the chain's progress. This could occur due to never-ending transaction execution, such as an infinite loop.
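The role of 'gas' as a bound on execution can be shown with a toy interpreter. This is a minimal sketch and not how any particular virtual machine meters execution: the three-instruction language (`inc`, `jmp`, `halt`), the flat cost of one gas unit per instruction, and the names `run` and `OutOfGas` are assumptions made for illustration.

```python
class OutOfGas(Exception):
    """Raised when a program exhausts its gas budget before halting."""


def run(program, gas_limit):
    """Execute a toy program, charging one unit of gas per instruction."""
    counter, pc, gas_used = 0, 0, 0
    while pc < len(program):
        if gas_used >= gas_limit:
            raise OutOfGas(f"stopped after {gas_used} instructions")
        gas_used += 1
        op, *args = program[pc]
        if op == "inc":      # increment the counter and move on
            counter += 1
            pc += 1
        elif op == "jmp":    # jump to an absolute instruction index
            pc = args[0]
        elif op == "halt":   # stop execution normally
            break
        else:
            raise ValueError(f"unknown instruction: {op}")
    return counter, gas_used


terminating = [("inc",), ("inc",), ("halt",)]
infinite_loop = [("inc",), ("jmp", 0)]

result = run(terminating, gas_limit=100)  # finishes well within the budget

try:
    run(infinite_loop, gas_limit=100)
    loop_was_stopped = False
except OutOfGas:
    loop_was_stopped = True  # the budget, not the program, ended execution
```

The interpreter never decides whether `infinite_loop` would terminate; it simply stops paying for it. The same cap that protects the chain also cuts off any legitimate long-running computation.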

This gas model significantly restricts the type of applications that can be executed ‘on the blockchain’, as they cannot operate beyond, or asynchronously from, the block’s limited execution cycles. Despite commendable advancements in block speed and size, the inherent limitations of blockchain systems will always pose a significant hurdle to running complex applications such as AI or games. Blockchains, or as we may aptly term them, gas-constrained virtual machines, are most suitable for overseeing asset transfers and coordinating metadata around business logic, which is the core concept around which SEKAI is designed.

While VFGs offer a pathway for applications to set custom finality rules and move away from typical gas-constrained VMs, their execution remains session-based. This setup requires application builders to instantiate their own virtual machine environments, or otherwise define their own state machine rules of execution, to address inherent limitations, a task that can be quite complex. This is why the Ethereum VM is frequently reused in the industry, much like Bitcoin was forked to create early altcoins. KIRA provides a solution to these constraints by enabling applications to operate in a blockchainless environment, where they can run asynchronously from their execution sessions. KIRA achieves this by allowing the application's Executor to continuously submit and update intermediate, non-final state hashes (or state snapshots) during an application execution session. The next Executor can then pick up the work in real time, or execute in parallel with the current Executor, enabling the application to operate like a classic Web2 app without being interrupted. Furthermore, scenarios that do not involve Multi-Party Computation (MPC), where only a single Executor is needed, see a substantial boost in application performance, pushing the boundaries of what has been achievable to date. In essence, the realization of general-purpose rollups relies on the synergistic combination of the flexible finality rules offered by VFGs and the full expressivity of Blockchainless Applications which, due to their asynchronous capacity, can achieve true Turing completeness.
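The handoff between Executors via intermediate snapshots can be sketched as follows. This is an assumption-laden illustration rather than KIRA's protocol: `CheckpointLog` is a hypothetical stand-in for wherever non-final state hashes are posted, the application state is a simple tick counter, and `execute` with its parameters is invented for the example.

```python
import hashlib
import json


def snapshot_hash(state):
    """Hash a canonical JSON encoding of the application state."""
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


class CheckpointLog:
    """Hypothetical stand-in for where intermediate, non-final snapshots are posted."""

    def __init__(self):
        self.entries = []  # tuples of (executor, step, state copy, state hash)

    def submit(self, executor, step, state):
        self.entries.append((executor, step, dict(state), snapshot_hash(state)))

    def latest(self):
        return self.entries[-1] if self.entries else None


def execute(executor, log, total_steps, checkpoint_every, stop_after=None):
    """Resume from the latest snapshot and post new snapshots while running."""
    last = log.latest()
    step, state = (last[1], dict(last[2])) if last else (0, {"ticks": 0})
    done = 0
    while step < total_steps and (stop_after is None or done < stop_after):
        state["ticks"] += 1  # one unit of application work
        step += 1
        done += 1
        if step % checkpoint_every == 0:
            log.submit(executor, step, state)
    return step, state


log = CheckpointLog()
# The first Executor stops after 5 steps; its last posted snapshot is at step 4.
execute("executor-a", log, total_steps=10, checkpoint_every=2, stop_after=5)
# The next Executor resumes from that snapshot and completes the session.
final_step, final_state = execute("executor-b", log, total_steps=10, checkpoint_every=2)
```

In this sketch the second Executor redoes only the single step performed after the last snapshot, so the application continues without restarting the session. How often snapshots are posted is a trade-off between redone work and posting overhead.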

Any execution environment can be made verifiable using VFG, including SEKAI, which might one day evolve into its own Layer 2, eliminating the need for its current consensus mechanism, Tendermint.